Every year, Global Witness works with partners in the Kick Big Polluters Out coalition to identify fossil fuel lobbyists who are attending the COP climate talks. For COP29, we pioneered an AI-assisted approach that streamlined and strengthened our analysis.

Every year, as part of the Kick Big Polluters Out (KBPO) coalition, Global Witness helps identify fossil fuel lobbyists who register to attend the COP climate talks.

These are well-attended events – 52,305 people signed up for COP29 in Azerbaijan, and 81,027 registered the year before.

The UNFCCC, the UN body that runs the talks, publishes a provisional list of participants and their organisational affiliations a few days into each conference. Classifying every individual attendee would be time-consuming and unnecessary, so instead we classified the organisations they represent, many of which send several attendees.
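Collapsing the attendee list into organisations is a simple grouping step. The sketch below assumes each attendee record is a dictionary with a hypothetical `organisation` key (the official list's actual format isn't described here), and it normalises case and whitespace so that trivial variants of the same name are counted together.

```python
from collections import Counter


def normalise_org_name(name: str) -> str:
    """Collapse whitespace and case so trivially different spellings match."""
    return " ".join(name.split()).casefold()


def organisations_with_counts(attendees: list[dict[str, str]]) -> Counter:
    """Count delegates per organisation, keyed by the normalised affiliation.

    The "organisation" field name is a hypothetical assumption, not the
    format of the official participants list.
    """
    counts: Counter = Counter()
    for attendee in attendees:
        org = normalise_org_name(attendee.get("organisation", ""))
        if org:  # skip attendees with no stated affiliation
            counts[org] += 1
    return counts


# Illustrative records only, not real attendees.
attendees = [
    {"name": "A", "organisation": "Zefiro Methane"},
    {"name": "B", "organisation": "zefiro  methane"},
    {"name": "C", "organisation": "Zefiro Methane "},
    {"name": "D", "organisation": "Example NGO"},
]
orgs = organisations_with_counts(attendees)
print(orgs.most_common(1))  # [('zefiro methane', 3)]
```

Only the unique keys of `orgs` then need researching, which is what turns tens of thousands of attendees into a much shorter list of organisations.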

Even after grouping attendees by organisation, about 20,000 organisations remained for COP29, each of which had to be researched and classified by hand by a KBPO volunteer: a laborious process.

To speed up this process, we trialled using generative AI to pre-classify these organisations.

The accuracy problem

By default, Large Language Models (LLMs), like the ones that power ChatGPT, have a problem with the truth.

When prompted with a question, particularly one that refers to a very specific and not well-known topic, an LLM can "hallucinate" – producing a plausible-sounding but completely fabricated answer.

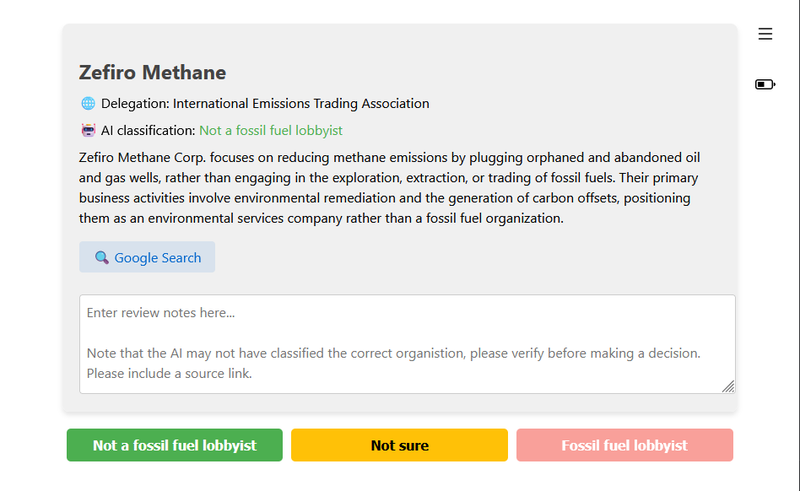

For example, ask Claude (a ChatGPT competitor) to tell you about a company like "Zefiro Methane", which has sent three delegates to COP29, and it will say it could be a "research initiative focused on studying methane emissions." The company, in fact, specialises in methane abatement.

The AI even warns that it "might hallucinate details" as it "does not have concrete, verifiable information about Zefiro Methane."

To mitigate this issue, many LLMs have been paired with search engines.

If you ask ChatGPT to tell you about Zefiro Methane, it searches the web, reads a few of the resulting pages, and then returns a much more accurate response.

This approach, sometimes called retrieval-augmented generation (RAG) or grounding, works by attaching relevant texts from company websites, news articles or other sources to the question that the AI must answer. Because the model can draw on this material rather than relying on what it memorised during training, its answers tend to be more accurate.
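In its simplest form, grounding is just prompt construction: retrieved texts are pasted into the prompt alongside the question, labelled by source so the model can cite them. This is a minimal sketch under that assumption; the function name, the instruction wording and the per-source character limit are illustrative choices, not the prompt Global Witness used.

```python
def build_grounded_prompt(question: str, sources: list[tuple[str, str]],
                          max_chars_per_source: int = 2000) -> str:
    """Attach retrieved source texts to a question (retrieval-augmented generation).

    `sources` is a list of (url, text) pairs, e.g. pages returned by a web search.
    Each text is truncated so the combined prompt stays within a rough size budget.
    """
    blocks = []
    for i, (url, text) in enumerate(sources, start=1):
        excerpt = text[:max_chars_per_source]
        blocks.append(f"[Source {i}] {url}\n{excerpt}")
    context = "\n\n".join(blocks)
    return (
        "Answer the question using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"{context}\n\nQuestion: {question}"
    )


prompt = build_grounded_prompt(
    "What does Zefiro Methane do?",
    [("https://example.com/about", "Zefiro Methane specialises in methane abatement.")],
)
```

Telling the model to answer only from the supplied sources, and to say when they are insufficient, is what discourages it from falling back on a fabricated answer.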

Classifying at scale

To do this at scale, we developed a tool that searches the web for each of the 20,000 organisations registered to attend COP29, collects the pages it finds, and extracts the relevant text from them.
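The article doesn't say which search service or extraction method the tool used, so the search step is left out here. The extraction step can be sketched with Python's standard `html.parser`: keep the human-readable text of a fetched page and drop scripts and stylesheets. `VisibleTextExtractor` and `extract_text` are hypothetical names for illustration.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collect human-readable text from an HTML page, skipping scripts and styles."""

    SKIP_TAGS = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a tag whose text we ignore
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text outside skipped tags, with whitespace collapsed.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(" ".join(data.split()))


def extract_text(html: str) -> str:
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


page = ("<html><head><style>p{}</style><script>var x=1;</script></head>"
        "<body><h1>Zefiro  Methane</h1><p>Methane abatement.</p></body></html>")
text = extract_text(page)  # "Zefiro Methane Methane abatement."
```

A production scraper would also need to handle encodings, boilerplate such as navigation menus, and pages rendered by JavaScript, which this sketch does not attempt.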

One challenge with this approach is that many websites use CAPTCHAs or other methods to prevent bots from scraping their pages.

To collect as much data as possible, we routed our requests through Oxylabs's Project 4beta, which significantly reduced these roadblocks.
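The article doesn't describe how the Oxylabs service was configured. As a generic illustration only, this is how requests made with Python's standard `urllib` can be routed through an HTTP proxy; the endpoint and credentials are hypothetical placeholders, not Oxylabs's real interface.

```python
import urllib.request


def make_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return a urllib opener that sends both HTTP and HTTPS traffic via proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Hypothetical placeholder: not a real Oxylabs endpoint or credential.
PROXY_URL = "http://USERNAME:PASSWORD@proxy.example.com:8000"
opener = make_proxied_opener(PROXY_URL)

# Fetching a page would then look like this (needs a working proxy and network access):
# html = opener.open("https://example.com/", timeout=30).read().decode("utf-8", "replace")
```

Building a dedicated opener, rather than installing it globally with `urllib.request.install_opener`, keeps proxied scraping traffic separate from any other requests the program makes.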

The texts collected for each organisation were then combined and sent to one of OpenAI's generative AI models (gpt-4o-mini), along with a description of what we consider a fossil fuel lobbyist. The model used this information to tell us whether it thought the organisation was a fossil fuel lobbyist, along with an explanation and sources.
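One way to structure this request is to put the definition in a system message, the research material in a user message, and ask for a JSON reply that can be checked automatically. This is a sketch under those assumptions: the definition is a placeholder (KBPO's wording isn't reproduced here), and the JSON field names are hypothetical.

```python
import json
from dataclasses import dataclass

# Placeholder: the definition KBPO actually used is not reproduced here.
DEFINITION = "<definition of a fossil fuel lobbyist goes here>"


@dataclass
class Classification:
    is_fossil_fuel_lobbyist: bool
    explanation: str
    sources: list[str]


def build_messages(org_name: str, combined_text: str) -> list[dict[str, str]]:
    """Messages in the role/content format used by chat-style LLM APIs."""
    system = (
        "You classify organisations attending the COP climate talks.\n"
        f"Definition of a fossil fuel lobbyist:\n{DEFINITION}\n"
        'Reply with JSON: {"is_fossil_fuel_lobbyist": true or false, '
        '"explanation": "...", "sources": ["url", ...]}'
    )
    user = f"Organisation: {org_name}\n\nResearch material:\n{combined_text}"
    return [{"role": "system", "content": system}, {"role": "user", "content": user}]


def parse_classification(reply: str) -> Classification:
    """Validate the model's JSON reply; raises ValueError if it is malformed."""
    data = json.loads(reply)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict) or not isinstance(data.get("is_fossil_fuel_lobbyist"), bool):
        raise ValueError("reply is not a JSON object with a boolean verdict")
    return Classification(
        is_fossil_fuel_lobbyist=data["is_fossil_fuel_lobbyist"],
        explanation=str(data.get("explanation", "")),
        sources=[str(s) for s in data.get("sources", [])],
    )
```

With the official `openai` Python package, the messages could be sent with `client.chat.completions.create(model="gpt-4o-mini", messages=messages, response_format={"type": "json_object"})`, and the reply text read from `response.choices[0].message.content` before passing it to `parse_classification`. Rejecting malformed replies, rather than guessing, means they can be retried or sent straight to a volunteer.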

Because AI models cannot be fully trusted to produce accurate results, KBPO volunteers still classified every organisation by hand. Each positive classification was then checked a second time by another volunteer, because we were especially concerned about wrongly accusing a company of being affiliated with the fossil fuel industry.
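The review rule can be expressed as a small decision function: the AI label is advisory, a volunteer's negative label is final, and a positive label is only published once a second volunteer has checked it. This is a hypothetical sketch; the article doesn't say how disagreements between volunteers were resolved, so here the second reviewer's verdict simply stands.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Review:
    org: str
    ai_label: Optional[bool]               # model pre-classification, advisory only
    volunteer_label: Optional[bool] = None  # first volunteer's verdict
    second_check: Optional[bool] = None     # second volunteer's verdict on positives


def final_label(r: Review) -> Optional[bool]:
    """Return the label to publish, or None while the review is incomplete.

    The AI label is never consulted here: it only helps volunteers prioritise.
    """
    if r.volunteer_label is None:
        return None           # not yet reviewed by a human
    if not r.volunteer_label:
        return False          # negatives need no second check
    if r.second_check is None:
        return None           # positive awaiting a second volunteer
    return r.second_check     # assumption: the second check decides
```

Keeping `ai_label` out of the decision makes the design explicit: the model speeds up research but cannot, on its own, put an organisation on the list.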

When does it work, and when does it not?

Overall, this approach produced reliable results, correctly classifying organisations in most instances.

In a small number of cases, misspellings or data entry errors in the original dataset meant the AI couldn't correctly identify and classify the organisation.

The tool we developed also struggled with short or generic names that could refer to multiple organisations. For example, AFD could stand for Canadian fuel supplier AFD Petroleum, but also for the German far-right party Alternative for Germany or the French Development Agency.

In these cases, the search results can favour a different organisation from the one that actually sent delegates to COP.
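One possible mitigation, not something the article says was used, is to flag short, acronym-like names such as "AFD" so that a human checks which organisation the search results actually describe. The heuristic and its thresholds below are illustrative guesses.

```python
def looks_ambiguous(org_name: str, max_len: int = 5) -> bool:
    """Flag short, acronym-like names that a web search may resolve to the
    wrong organisation. Thresholds are illustrative, not tuned on real data."""
    name = org_name.strip()
    is_single_token = len(name.split()) == 1
    uppercase_count = sum(ch.isupper() for ch in name)
    return is_single_token and len(name) <= max_len and uppercase_count >= 2


names = ["AFD", "AfD", "AFD Petroleum", "Zefiro Methane"]
flagged = [n for n in names if looks_ambiguous(n)]  # ["AFD", "AfD"]
```

A check like this catches only acronyms; generic full-word names would still rely on volunteers noticing that the search results describe the wrong organisation.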

In early tests, the AI also tended to interpret our definition of a fossil fuel lobbyist too broadly, producing more false positives. Investors and consultants with close ties to the fossil fuel industry were classified as fossil fuel lobbyists, even though they didn't quite fit the definition we were using.

We were able to mitigate most of these misinterpretations by providing examples of edge cases with each prompt.
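Supplying worked examples in the prompt is known as few-shot prompting. The sketch below adds each edge case as an earlier user/assistant exchange, so the model sees how a borderline organisation should be labelled before it answers the real question. The example investor and its verdict are hypothetical illustrations, since the article doesn't publish the edge cases it used.

```python
import json


def few_shot_messages(system_prompt: str,
                      examples: list[tuple[str, dict]],
                      org_name: str, research_text: str) -> list[dict[str, str]]:
    """Insert worked edge cases as prior user/assistant turns before the real query."""
    messages = [{"role": "system", "content": system_prompt}]
    for description, verdict in examples:
        messages.append({"role": "user", "content": description})
        messages.append({"role": "assistant", "content": json.dumps(verdict)})
    messages.append({
        "role": "user",
        "content": f"Organisation: {org_name}\n\nResearch material:\n{research_text}",
    })
    return messages


# Hypothetical edge case: an investor with fossil fuel ties that falls outside the definition.
EDGE_CASES = [
    ("Organisation: Example Capital\n\nResearch material:\n"
     "An investment firm whose portfolio includes oil and gas companies.",
     {"is_fossil_fuel_lobbyist": False,
      "explanation": "Investor with fossil fuel ties, but it does not meet the definition.",
      "sources": []}),
]
```

The same examples could instead be appended to the system prompt as plain text; presenting them as prior turns has the advantage that they also demonstrate the expected JSON reply format.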

What's next?

The approach described here can be used to automate or speed up research beyond the classification of COP delegates.

Researchers and journalists could use tools like these to automate tedious tasks that would take days or weeks to do by hand. For example, they could trace the ultimate owners of companies, find and summarise academic papers, identify potential conflicts of interest or scrutinise public spending.

As LLMs become more capable and more affordable with each new iteration, they are also becoming more useful for research tasks.

Nevertheless, there are still fundamental issues with how AI approaches the truth that don't have an obvious solution in the near future. In situations where accuracy is critical, AI outputs still need to be verified and fact-checked by humans.

We will build on our experience in the coming year, and we hope to make the results of our work available for others to use.