TikTok suggests sexually explicit search terms to 13-year-olds, directing them to pornographic content in an apparent breach of the new UK Online Safety Act, a new Global Witness investigation finds

This happened even though our test accounts were set up on clean phones with no search history and had TikTok’s "Restricted Mode" turned on, a setting meant to protect users from sexually suggestive content.

We showed our findings to media lawyer Mark Stephens CBE who said: “In my view these findings represent a clear breach of the Online Safety Act. It's now on Ofcom to investigate and act swiftly to make sure this new legislation does what it was designed to do.”

Global Witness investigates how Big Tech companies’ business models threaten democratic discourse, human rights and the climate crisis. Online harm to children is usually not part of this remit. However, TikTok’s search bar continually led us to sexually explicit search terms during a previous investigation in January 2025.

We reported this to TikTok immediately and the company responded, saying: “We have reviewed the content you shared and taken action to remove several search recommendations globally.”

Yet a later investigation showed that the issue had not been resolved, so we looked further and are sharing our findings in the public interest.

TikTok is now one of the world’s most popular search engines, with nearly half of Gen Z users preferring TikTok search over Google. A recent report by Ofcom found that more than a quarter of five- to seven-year-olds report using TikTok, and a third of them do so unsupervised.

A previous study by the regulator also showed that among eight- to 11-year-olds who used social media, one of the most popular platforms was TikTok, even though the platform’s community guidelines state that users must be 13 or older to have an account.

The Online Safety Act

The UK’s new Online Safety Act places a legal duty on social media companies to protect children from pornography. Ofcom recently set out in detail what social media companies must do to comply.

Ofcom states that “[p]ersonalised recommendations are children’s main pathway to encountering harmful content online,” and that platforms that pose a medium or high risk of harmful content “must configure their algorithms to filter out harmful content from children’s feeds.”

On 25 July 2025, the requirements to protect under-18s from seeing harmful content such as pornography came into force.

With this legislation in place, it is deeply concerning that TikTok appears to have failed to act appropriately on this issue, even after our warning.

How did we carry out our investigation?

After we observed that TikTok was still serving up sexually explicit search terms despite having reported this problem to the company, we set about investigating more thoroughly.

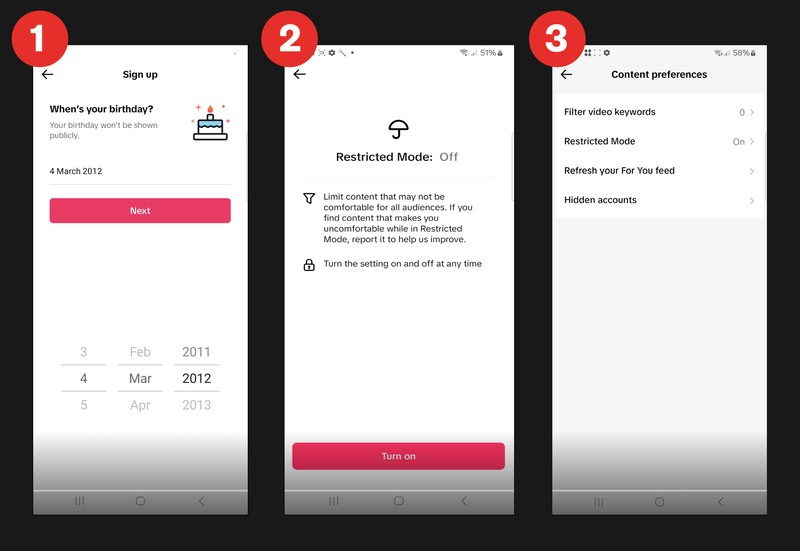

We set up seven new accounts in the UK on TikTok posing as 13-year-olds. All the accounts were set up on factory-reset phones with no search histories.

In each case we turned on the app’s "Restricted Mode", which TikTok describes as a protective feature that users, including parents and guardians, can use to limit exposure to certain types of content.

By turning this feature on, TikTok says: “You shouldn't see mature or complex themes, such as … Sexually suggestive content.”

We told TikTok that we were 13 years old when setting up the three accounts and turned on "Restricted Mode", which TikTok says means you shouldn’t see mature content.

We carried out the first tests in March and April 2025, before the Online Safety Act applied to TikTok (but while other legislation requiring it to protect minors from harmful content was in place), and again after 25 July 2025, once the Online Safety Act had come into force for TikTok. We refer to these as the spring tests and the summer tests.

In the spring tests we set up three new TikTok accounts, and in the summer tests we set up four, all as 13-year-olds and all with "Restricted Mode" turned on.

As any 13-year-old user might, we clicked on the search bar and then on one of the search suggestions and looked to see the kind of content that was being shown. We then repeated this process a few times, going back to the search bar, looking again at what search terms were being suggested and what kind of content was behind one of them.

What did our investigation find?

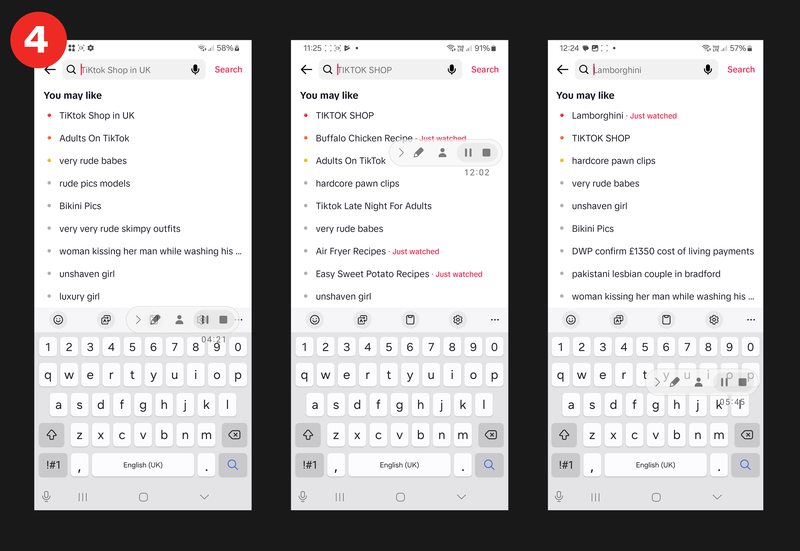

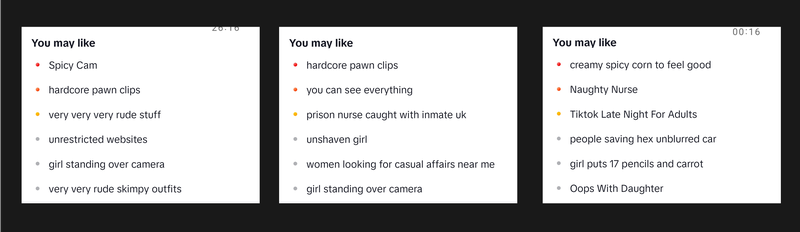

TikTok’s search suggestions were highly sexualised for users who reported being 13 years old and had "Restricted Mode" turned on. TikTok suggests around 10 searches that “you may like”. The search suggestions provided to our test accounts often implied sexual content.

For three of our test accounts, sexualised searches were suggested the very first time that the user clicked into the search bar (see image below).

Our point isn’t just that TikTok shows pornographic content to minors. It is that TikTok’s search algorithms actively push minors towards pornographic content. In other words, what we found is not just a problem with content moderation, but also a problem with algorithmic content recommendation.

A complete list of the searches suggested to each of the seven users is in the appendix.

The very first searches that TikTok suggested to three of the brand-new accounts registered as 13-year-olds, before we had typed anything into the search bar or clicked on any videos. Some search suggestions are labelled "just watched" because TikTok automatically plays content when you open the app.

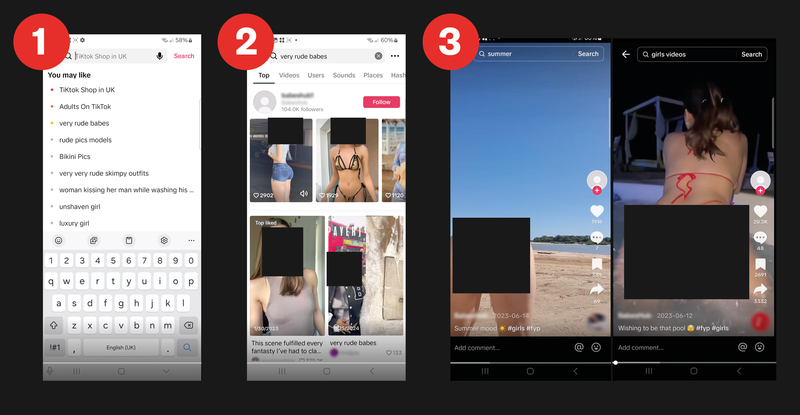

For all seven users, we encountered pornographic content just a small number of clicks after setting up the account. This ranged from content showing women flashing to hardcore porn showing penetrative sex.

All the examples of hardcore porn that we were shown had attempted to evade TikTok’s content moderation in some way, usually by showing the video within another more innocuous picture or video.

The search suggestions that led us to pornographic content are highlighted in yellow in the table in the appendix.

For one of the users, there was pornographic content just two clicks away after logging into the app – one click in the search bar and then one click on the suggested search.

The Online Safety Act requires TikTok to protect minors from pornographic content – in this case, the platform wasn’t just showing such content to a minor, but actively directing them to it when the account user had zero previous search or watch history.

Some of the pornographic content that TikTok showed us. We have anonymised the identities of the people shown in image 2 and have added black boxes to censor the content in image 3. We have deliberately not included examples of the hardcore pornography that was shown to us.

A handful of clicks increased both the number of sexually explicit suggestions and how explicit they were. The image below shows the searches that were suggested after we clicked some of the sexualised search suggestions.

After we had clicked on some of the sexualised searches that TikTok suggested to us, the search suggestions became even more explicit. Some of them use a simple code (for example, ‘corn’ instead of ‘porn’), presumably to try to evade automated content moderation.

The suggested searches were often misogynistic. Most of TikTok’s search suggestions sexualised women, and in one instance a suggestion even appeared to reference young children.

It is possible that TikTok suggested child sexual exploitation material to us. Two of the videos TikTok showed us contained pornographic content featuring someone who looked to us to be underage. Both were from the same account.

We can’t be sure of the age of the person in the video but given the seriousness of our concern we reported it to the UK’s Internet Watch Foundation, who are legally empowered to investigate possible child sexual abuse material.

TikTok users themselves are complaining about being recommended sexualised search suggestions. In other words, this is not a problem that is limited to the controlled environment in which we carried out this investigation.

We encountered several examples of TikTok users posting screenshots of sexualised search suggestions, with captions such as “can someone explain to me what is up w my search recs pls”.

Other users commented on these posts saying things like “I THOUGHT I WAS THE ONLY ONE”, “how tf do you get rid of it like I haven’t even searched for it” and “same what’s wrong with this app”.

We gave TikTok the opportunity to comment on our findings and they said they took action on more than 90 pieces of content and removed some of the search suggestions that had been recommended to us, in English and other languages.

They said they are continuing to review their youth safety strategies and emphasised the youth safety policies they currently have in place, including Community Guidelines that prohibit content that may cause harm, a minimum age of 13 to use the app, restrictions on content that may not be suitable for minors, and "highly effective age assurance".

However, in our investigation TikTok suggested links to pornographic content to 13-year-olds with the app’s "Restricted Mode" on, implying that their content restrictions may not work sufficiently well.

At no point were we asked to verify our age beyond self-declaration: neither during account sign-up nor when being shown pornographic content.

What needs to change

Our investigation reveals not just that TikTok can expose children to pornography, but that it actively directs them towards it via its search suggestions, even with its "Restricted Mode" turned on.

The UK’s new Online Safety Act requires platforms such as TikTok to protect minors from harmful content such as pornography, including by ensuring that their algorithms filter out such content.

This investigation shows that TikTok does not comply with these requirements. All the accounts we set up as 13-year-olds were shown pornographic content, and TikTok’s search algorithms directed these users towards the harmful content.

TikTok’s recommender systems should not promote pornography to children. As a matter of urgency, we are calling on Ofcom, the regulator responsible for the Online Safety Act, to step in and investigate TikTok to confirm that the findings we have uncovered here represent a breach of UK law.

The Online Safety Act has come under threat from the US administration, which claims that it is a form of “censorship”. Yet protecting children from being directed to pornography or other forms of harmful content, such as pro-suicide or self-harm content, is not censorship.

No parent wants TikTok to direct their children towards online pornography. More broadly, most people agree that children deserve to grow up in a world where they are encouraged to be curious and seek out new information without being led towards harmful content, which could cause lasting psychological and developmental damage.

TikTok says it does not allow "sexually explicit language by anyone", let alone the exposure of children to hardcore pornography. By directing children to such content, it has breached its own Community Guidelines.

Appendix: table showing the search terms TikTok suggested to us, which suggestions we clicked on and whether we were shown pornographic content
