Late last year, Germany’s governing coalition collapsed, forcing a snap election. As Germans prepare to head to the polls on Sunday 23 February, the effect that social media platforms’ algorithms may have on the election is under the spotlight.

What people see online matters. Political posts influence people. And so when the EU’s largest country and the world’s third biggest economy goes to the polls, the effect of social media platforms’ algorithms can be huge.

We investigated what content social media platforms show to non-partisan users in Germany. To do so, we set up a test of what kind of political content platforms’ algorithms show to new social media users in Germany who show an interest in German politics but no particular ideology or favourite party.

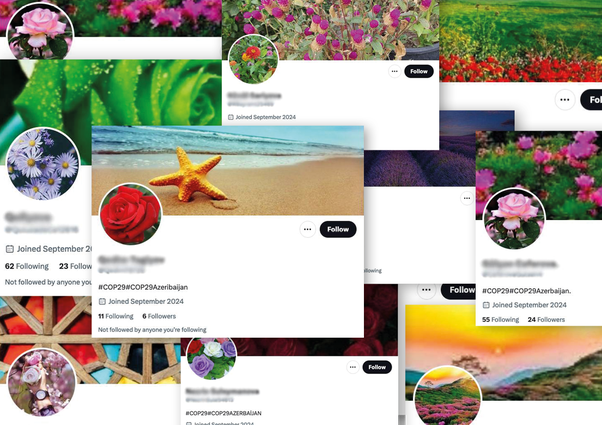

We set up nine new social media accounts – three on TikTok, three on X and three on Instagram. We chose TikTok and X because of the questions raised about their content recommendation systems in other European elections, and Instagram because it’s the most popular social media platform in Germany.

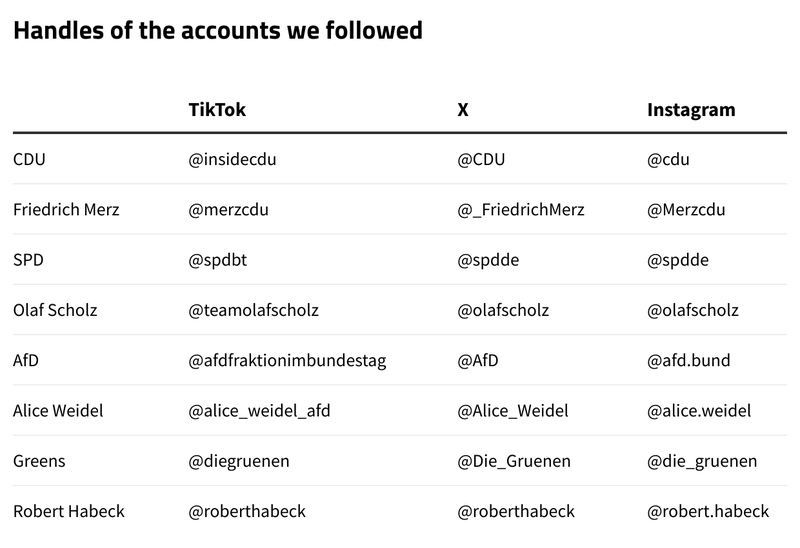

We sought to seed the accounts with an equal interest in Germany’s four major parties – the Christian Democrats (CDU), Alternative für Deutschland (AfD), the Social Democrats (SPD) and the Greens – by clicking follow on one official account for each party and on its leader’s account, and by watching their content.

We set up our social media accounts so they showed an equal interest in Germany’s four biggest parties. This is one of the posts watched on X

We then went to each platform’s algorithmically curated "For You" feed to see what type of content the platform was feeding to us. (For further details, see the Methodology.)

So what did we find?

Right-leaning content dominated on X and TikTok

All three platforms primarily showed us political content.

We assessed the political leaning of content based on whether a post that we were shown in the For You feed supported or showed a particular party or politician, or contained clearly right- or left-leaning messages or public figures.

Of the left- or right-leaning content on X and TikTok, we were shown more than twice as many posts that leaned right as left. The equivalent figure on Instagram was nearly 1.5 times as much right as left.

AfD dominated the posts we were shown from accounts we followed

The political content that we were shown included both content that came from the official accounts we followed and content that came from accounts we didn’t follow.

Given that we followed and looked at content from eight official party accounts, we expected to be shown content in the For You feed from these accounts. There were substantial differences, however, in the amount of content we saw from each of the accounts we followed.

On X and Instagram, AfD content made up the largest proportion of content that we were shown from the accounts we followed from Germany’s four major parties.

On TikTok, we were shown equal numbers of posts from the AfD and SPD accounts we followed. However, only 8% of the posts we were shown on TikTok came from accounts we followed.

Pro-AfD posts dominated party political content from accounts we didn’t follow on TikTok and X

As well as showing you content from accounts you follow on the For You feed, platforms often show you other content that they think you might be interested in – content that has been selected by their recommender systems. Which content a recommender system selects is based on the user’s behaviour, but the exact mechanism is opaque.

You have control over the accounts you choose to follow. While there are ways to indicate to a platform what else you want to see, ultimately which posts platforms feed to you is largely beyond your control. And in our test, the party political content chosen by the recommendation systems on TikTok and X was politically biased.

We reviewed how much party political content supportive of or opposed to one of Germany’s four major political parties was shown.

On TikTok, 78% (28/36) of this recommended party political content was supportive of AfD.

On X, 64% (14/22) of such recommended party political content was supportive of AfD.
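These percentages are simple shares of the recommended party political posts. As a minimal sketch of the arithmetic, using only the counts quoted above (the function name `share_percent` is ours, chosen for illustration):

```python
def share_percent(supportive: int, total: int) -> int:
    """Return supportive/total as a percentage rounded to the nearest whole number."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * supportive / total)


# Counts quoted in the text:
# (posts supportive of AfD, recommended party political posts)
counts = {"TikTok": (28, 36), "X": (14, 22)}

shares = {
    platform: share_percent(supportive, total)
    for platform, (supportive, total) in counts.items()
}

for platform, pct in shares.items():
    print(f"{platform}: {pct}% supportive of AfD")
```

With samples this small (36 and 22 posts), each individual post moves the share by roughly three to five percentage points, so the figures are best read as indicative rather than precise.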

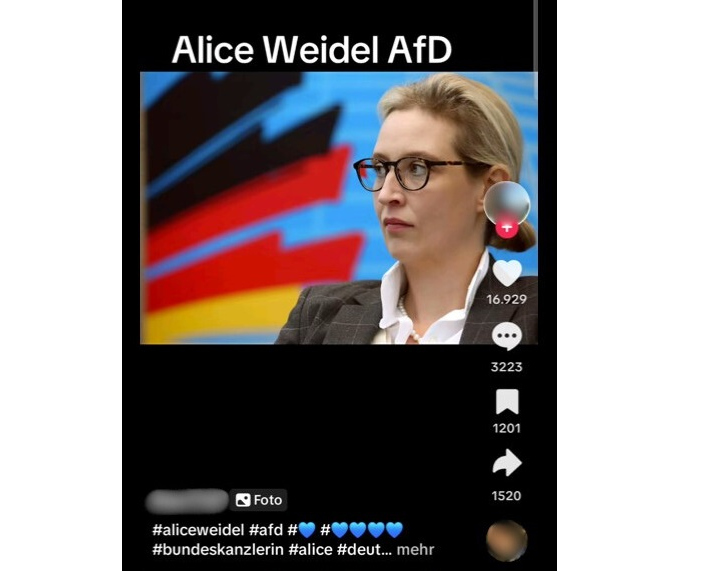

On TikTok and X, the For You feeds showed us a lot of content that was supportive of AfD such as this post on TikTok

The picture on Instagram was very different. 96% of the content we were shown on Instagram came from one of the eight accounts we followed. Indeed, across all three tests there was only one political post we were shown that didn’t come from an account we followed. It was supportive of the SPD.

As well as being shown posts that were supportive of a particular party, we were also shown posts on X and TikTok that were opposed to them. For completeness, the chart below shows how many of these posts we were shown.

Isn’t AfD just more popular on social media?

It could be argued that part of the reason we saw disproportionate amounts of AfD content on TikTok and X is that AfD is more popular on social media, or that it posts more than other parties.

But in fact, on TikTok, AfD and its leader do not post the most content. The CDU and its leader have posted 69% more content than the AfD and its leader in 2025, and the Greens and their leader have posted 24% more.

On X, AfD and its leader have posted more than any of the other parties so far in 2025. However, the Greens and their leader have posted the second highest number of posts, and in our tests content supportive of the Greens was the least visible, while content supportive of the AfD was the most visible. Frequency of posting therefore does not tell us much about algorithmic prioritisation.

Additionally, popularity stats don’t give us the whole picture. The popularity of an account cannot be disentangled from the effects of the platforms’ content recommendation systems. Becoming popular and being algorithmically recommended are self-reinforcing. Algorithmic recommendation boosts views, likes and follows, while having more views, likes and follows boosts recommendation.

We don’t know exactly why TikTok and X’s algorithms showed us these specific posts. But platforms must ensure that they don’t undermine electoral integrity. This is especially true where there are indications that their algorithms may be influencing the direction of political discourse during an election period.

X amplified several posts containing hate

Some of the posts that X showed to us on the For You feed contained content which we believe is prohibited by the platform, or that should not be actively promoted by a platform’s algorithms.

One post contained an image of a river of blood running through the streets and suggested that all migrants from certain countries should be treated as suspects and surveilled. This post would appear to breach X’s policies, which prohibit spreading fearful stereotypes about people of a certain nationality, religion or ethnicity, including saying that they “are more likely to take part in dangerous or illegal activities.”

We reported this post to X. The company responded that the report was being reviewed, but at the time of writing we had received no further response and the post was still live on the platform.

One post came from an account that posted severely anti-Semitic content, including Holocaust denial. Another post in our For You feed appeared to be trying to mock a politician by superimposing their head onto a disabled TV show character.

TikTok amplified a pro-AfD advert that breaches its own rules

Across all nine test accounts, we were shown only one advert: a paid partnership post supporting AfD on TikTok, which has been viewed more than 600,000 times. TikTok bans all political and issue-based adverts, in paid promotions as well as direct adverts.

It is concerning not only that TikTok fails to fully enforce its ban on political advertising, but also that its content recommendation algorithms promoted content that breaches its own policies. After we alerted the company to the paid partnership post supporting AfD, TikTok removed it.

How did the companies respond to our findings?

We wrote to X and TikTok to give them the opportunity to comment on the investigation’s results and analysis. X did not respond. TikTok said the methodology did not reflect the experience of other accounts that interact with a variety of topics.

The company said the For You feed is intended to be unique to each user and suggested other factors that influence what content a user sees, as described on their website. This includes, for example, the videos the user has recently interacted with, how others interact with content, and the time the video was posted.

They said TikTok has shown a strong commitment to election integrity in Germany, providing a resource for reliable information on the app, and removing harmful content among other measures aimed at strengthening the platform.

TikTok also said that while it did not receive remuneration for the specific political advert that we shared, the advert had been removed for violating TikTok’s branded content policy.

Additionally, TikTok said the platform does not favour any particular political candidate or party, which we do not dispute. We do not allege that TikTok deliberately favours AfD content over that of other political parties. Our point is that its algorithms have nevertheless produced such a result.

What do our findings mean and what should happen as a result?

The EU’s Digital Services Act requires very large online platforms, which include all those investigated here, to identify and mitigate any risks that they might pose to electoral processes. This must include any risks posed by their algorithmic systems.

Indeed, content recommendation algorithms on X and TikTok have come under scrutiny recently.

Politicisation of X

Elon Musk, the owner of X, has come out in support of AfD, and in January he interviewed the leader of AfD, Alice Weidel, live on the platform. During this interview, Musk did not disagree when Weidel falsely claimed that Adolf Hitler was a “communist”.

At the same time, the EU said it would monitor whether X's algorithms promoted the interview in a way that would give AfD an unfair advantage in the election.

The EU has already announced a wide-ranging investigation into X, recently expanded to include the design of its recommender systems. The platform is required to tell the regulator about any past alterations to the algorithm by 15 February – information that could reveal whether the Weidel livestream got a boost.

TikTok’s recent record

In December 2024, the Romanian presidential election was cancelled amid warnings of Russian influence via TikTok after the little-known ultranationalist candidate Călin Georgescu won the first round.

Georgescu claimed to have spent "nothing" on his campaign but gained a significant TikTok presence, opening up questions of possible manipulation of the platform to boost his national profile.

Our own investigation found that TikTok recommended an average of eight times as much content supporting the far-right Georgescu as his opponent to new users who showed an interest in both candidates.

The EU announced that it was investigating the platform to see if it had sufficiently dealt with the risk that its recommender systems might be manipulated in a way that undermined the integrity of the election.

Why does algorithmic bias matter to democracy?

Social media algorithms are designed to boost engaging content that will get more people to watch and click, which will make the platform more money from advertisers.

But the same algorithmic systems that are designed to keep users engaged could also end up promoting one political party, which poses risks to democracy.

Platforms make deliberate choices when designing the recommender systems behind their algorithmically curated "For You" feeds. They therefore must bear responsibility for what they are choosing to serve. This is especially relevant when choosing to serve content that does not come from accounts that a user has themselves chosen to follow.

We believe that the disproportionate prevalence of right-leaning and pro-AfD content on TikTok and X shows the risks that these platforms’ algorithms pose to democracy. We call on the European Commission to look into possible political bias in the content recommendation algorithms of X and TikTok.

Methodology

For each of the three platforms, X, TikTok and Instagram, we set up new accounts from Germany. We did this on a computer for X and Instagram and on a phone for TikTok. The computer browser was cleared of any cookies and history before each test, and the phone was factory-reset before each test.

Each account showed an interest in German politics, with as far as possible an equal interest in all four of the biggest political parties. We did this by having all nine accounts:

1) Click "follow" on the four biggest political parties (CDU, SPD, AfD, the Greens) and their leaders (Merz, Scholz, Weidel, Habeck)

2) Click on the top five posts from each of the above accounts. If the posts were videos, we watched for at least 30 seconds. If the posts were threads, we scrolled through the whole thread.

3) We then went to the algorithmically curated "For You" feed. We spent 15 minutes per platform for each of the three test accounts watching the content we were shown. Whenever we were shown content about German politics, we interacted with it. If the post was a thread or contained multiple images, we scrolled through all the contents. If it was a video, we watched it for at least 30 seconds.

We manually classified the content we were shown according to:

- Whether the post came from one of the eight accounts we followed

- Whether the post supported or opposed a German political party

- Whether the post was left-leaning or right-leaning

- Whether the post was an advert
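The classification scheme above can be pictured as one record per post, tallied afterwards. The sketch below is illustrative only: the `ClassifiedPost` record, its field names and the sample rows are our assumptions and are not the investigation's actual data or tooling. It also simplifies by assigning at most one party to each post.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ClassifiedPost:
    """Hypothetical record for one manually classified post."""
    platform: str                # "X", "TikTok" or "Instagram"
    from_followed_account: bool  # from one of the eight followed accounts?
    party: Optional[str]         # party the post is about, or None
    stance: Optional[str]        # "supports", "opposes" or None
    leaning: Optional[str]       # "left", "right" or None
    is_advert: bool


def recommended_support_counts(posts, platform):
    """Count posts supporting each party among posts on `platform`
    that did NOT come from a followed account."""
    return Counter(
        p.party
        for p in posts
        if p.platform == platform
        and not p.from_followed_account
        and p.stance == "supports"
        and p.party is not None
    )


# Made-up example rows, NOT data from the investigation.
sample = [
    ClassifiedPost("TikTok", False, "AfD", "supports", "right", False),
    ClassifiedPost("TikTok", False, "SPD", "supports", "left", False),
    ClassifiedPost("TikTok", True, "CDU", "supports", "right", False),
    ClassifiedPost("TikTok", False, "AfD", "opposes", "left", False),
    ClassifiedPost("X", False, "Greens", "supports", "left", False),
]

tiktok_counts = recommended_support_counts(sample, "TikTok")
print(tiktok_counts)
```

Keeping "from a followed account" as its own field is what allows recommended content to be separated from content the user chose to see.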

We use the term “party political” to describe posts that express support for a German political party.

We use the term “left-leaning” to describe posts from the SPD, the Greens or the Left party, posts supportive of or featuring one of those parties or figures and posts that are not party political but support left-leaning ideas, from centre-left to far-left. This included a small number of posts we were shown about non-German politics (principally US politics).

We use the term “right-leaning” to describe posts from the CDU, CSU, FDP, AfD, posts supportive of or featuring one of those parties or figures and posts that are not party political but support right-leaning ideas, from centre-right to far-right. This included a small number of posts we were shown about non-German politics (principally US politics).

We thank a German consultant for their support on this research.

This piece was updated on 27 March 2025. The following line was added to the text: "We reviewed how much party political content supportive or opposed to one of Germany’s four major political parties was shown." The caption of the chart showing criticism and support for the four parties on X and TikTok was expanded to clarify that some of the individual posts included were critical or supportive of more than one major party, and that we have only included numbers of posts expressing critique or support for the four major parties.