With days to go before Poland’s presidential election runoff on June 1, Global Witness finds that TikTok’s algorithm is feeding new, politically balanced users twice as much far-right and nationalist right content as centrist and left-wing content

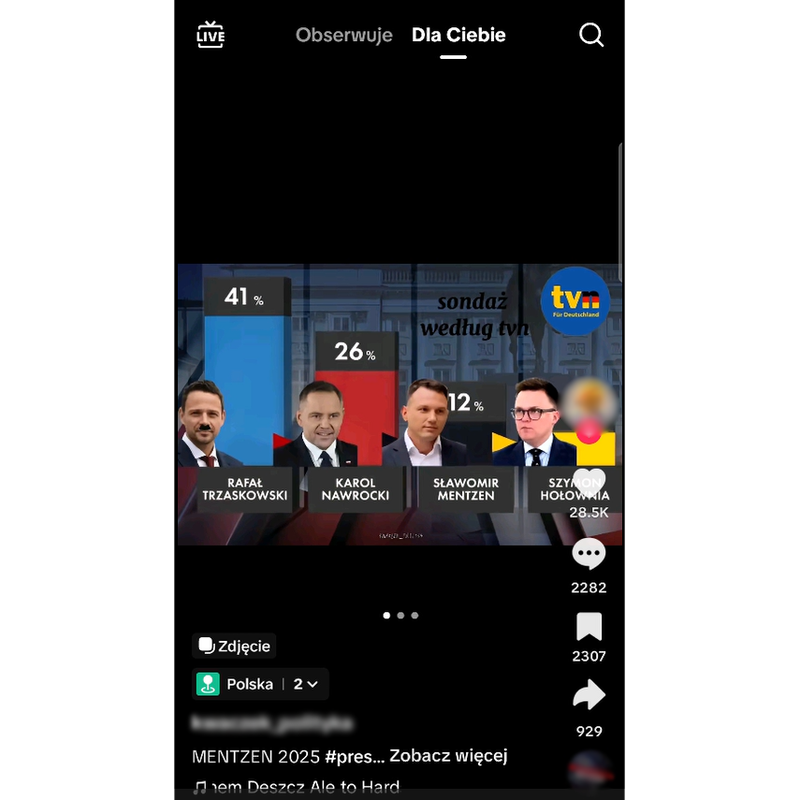

The investigation reveals a pronounced skew in the content promoting the two presidential candidates. TikTok showed our test accounts five times as much content supporting nationalist right candidate Karol Nawrocki as content supporting centrist candidate Rafal Trzaskowski.

This is despite the fact that at time of testing, the centrist candidate’s official TikTok account was more popular than his opponent’s with 12,000 more followers and nearly 1 million more likes.

In the first round of the election, Trzaskowski came top with 31.3% of the vote while Nawrocki received 29.5%.

Our findings come just two weeks after a Global Witness investigation in Romania found TikTok’s algorithm was serving nearly three times as much far-right content as all other political content. Similar tests around previous elections in Germany and Romania also suggested that TikTok’s algorithm pushes users towards far-right content.

TikTok is currently under investigation by the European Commission for its handling of election risks, with particular reference to the annulled Romania election in late 2024.

Our results suggest TikTok has not taken sufficient action to prevent its platform prioritising right-wing content and again risks undermining the integrity of a national election.

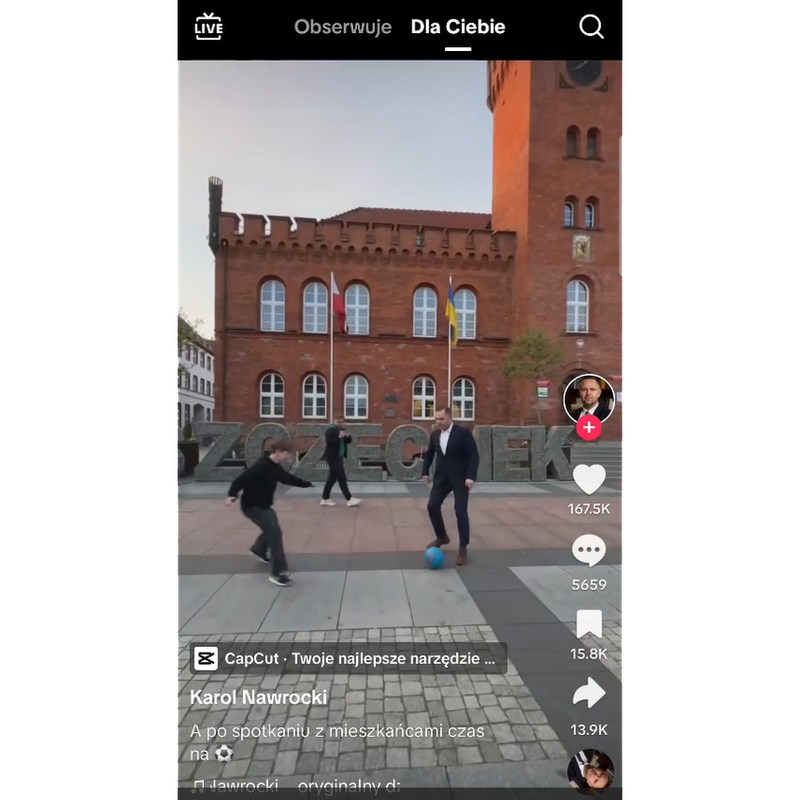

TikTok’s algorithm served more than five times as much content supporting nationalist right candidate Nawrocki as his centrist opponent Trzaskowski to our notional new, politically balanced users. This post of him playing football with children, by an account labelled Karol Nawrocki, but not the official account that we followed, was shown to all three of our users

Tracking TikTok’s recommendations

Despite not having access to TikTok’s algorithm itself, which the company guards closely, external researchers can still assess the algorithm’s behaviour by examining the posts and patterns of content it chooses to serve to users.

In this instance, we wanted to find out what an undecided voter in Poland might see if they signed up for the platform ahead of the election runoff. So we created TikTok accounts that signalled equal interest in the two presidential candidates.

We did this for three users of different ages – from a Gen Z first-time voter to someone in their 40s – with names commonly associated with different genders and then looked at what content the algorithm showed each of them on the For You page.

The results were striking. Across the three accounts, each viewed for 10 minutes, we were served more than five times as much content supporting nationalist right candidate Nawrocki as content supporting centrist candidate Trzaskowski, the Warsaw mayor who came top in the first round of the election on 18 May.

When we also accounted for posts that explicitly opposed each candidate, net sentiment (supportive posts minus opposing posts) pointed in opposite directions for the two candidates, as shown in the graph below.

The app recommended 73 posts containing political content (out of a total of 112 posts), of which it was possible to determine the political leaning of 61 posts.

Of these 61 posts, two-thirds (67%) promoted or platformed far-right or nationalist right views or people and one-third (33%) promoted or platformed centrist and left-wing political views. This is twice as much hard right content as all other political content.
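As a rough check on the arithmetic, the short Python sketch below recomputes the "twice as much" ratio from the published percentages. The approximate post counts it derives are inferred from those rounded percentages and are an assumption, not figures reported in the investigation.

```python
# Published figures: 61 posts with a determinable political leaning,
# 67% far-right or nationalist right, 33% centrist or left-wing.
classified_posts = 61
hard_right_share = 67  # percent
other_share = 33       # percent

# Ratio of hard right content to all other political content.
ratio = hard_right_share / other_share

# Approximate post counts implied by the rounded percentages.
# These are inferred for illustration, not reported in the article.
approx_hard_right = round(classified_posts * hard_right_share / 100)
approx_other = round(classified_posts * other_share / 100)

print(f"ratio of hard right to other content: {ratio:.2f}")
print(f"implied counts: about {approx_hard_right} vs about {approx_other}")
```

A ratio of roughly 2 is what "twice as much" describes; the implied counts also sum to the 61 classified posts.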

We were shown almost four times as much nationalist right content (aligning with candidate Nawrocki, who is backed by the Law and Justice (PiS) party) as centrist content (the political alignment of Trzaskowski, a member of Donald Tusk's Civic Platform (PO) party).

All of the political posts we were shown were about Polish politics.

The only video to show up in the feeds of all three of our accounts was of Nawrocki playing football with children. This is not obviously sensational, but may be an unlikely and engaging scene, contributing to its popularity (at time of writing it has 2.7 million views).

We were also shown two posts containing antisemitic content from far-right accounts. We reported both to TikTok but, to date, it has not acted on them.

Separating signal and noise

In order to try to show an equal interest in the two presidential candidates, we seeded each account in the following way:

- We searched for the official accounts of the two remaining presidential candidates Rafal Trzaskowski and Karol Nawrocki, and no other accounts.

- We opened each account and clicked “follow”.

- We watched five minutes of posts from each candidate.

- In setting up the user accounts, we alternated between searching for Trzaskowski first and for Nawrocki first.

- We watched all posts in the For You page that were political in any way, skipped past posts that were not political, and analysed the content.

TikTok has stated that it draws on many signals to shape what content it recommends, such as videos recently interacted with, amount of time spent viewing a video, how others interact with content, the time and region in which the video was posted, and video language settings.

However, TikTok has not shared exactly how these signals work or how they affect recommendations, nor made all of these datapoints publicly available for analysis. Without access to this information, we focused on the variables we are able to monitor and worked to keep all other elements as consistent as possible across the tests.

The accounts we created were all real accounts being shown real content by the platform.

We gave TikTok the opportunity to comment on these findings. They said that our investigation was unscientific, misleading and the conclusions drawn from it were wrong.

In particular, they said that engaging narrowly on a single topic does not reflect a typical user’s experience and that our interactions with the platform such as skipping posts not related to politics will have skewed the results.

They stated that they are committed to upholding election integrity on TikTok, that they are cooperating fully with the European Commission’s investigation into the platform’s role in the 2024 Romania election, and that they provide more information on their recommender system on their website.

Our new, politically balanced TikTok users were often shown posts that could be read as opposed to the centrist candidate Trzaskowski, such as this post which appears to give him a Hitler moustache and promotes far-right candidate Sławomir Mentzen

What if the user doesn’t engage with the presidential accounts first?

We wanted to see whether seeding the account (following both candidates and watching their content before turning to the For You page) had much of an effect on what TikTok's algorithm showed us. So we ran the experiment a fourth time.

This time, we opened a new account on a clean phone, and went straight to the For You page. We watched for 10 minutes, skipping past any content that wasn't political, as with the other tests. We skipped past close to a dozen non-political posts before encountering the first piece of political content, and the feed became focused on political content a few dozen posts later.

As before, there was a bias in the content that it showed us. Of the 11 posts that promoted a political view or platformed a politician, 82% supported far-right or nationalist right political views, 18% supported centrist views and none supported the left.
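The percentages above are reported without the underlying post counts. The Python sketch below, offered only as an illustration, finds which whole-number counts out of 11 posts are consistent with the rounded percentages. The helper name `counts_matching` is hypothetical, and the resulting counts are inferred rather than reported.

```python
def counts_matching(total: int, rounded_pct: int) -> list[int]:
    """Return every whole-number count out of `total` that rounds to `rounded_pct` percent."""
    return [k for k in range(total + 1) if round(100 * k / total) == rounded_pct]


total_posts = 11  # posts that promoted a political view or platformed a politician

hard_right = counts_matching(total_posts, 82)  # far-right or nationalist right
centrist = counts_matching(total_posts, 18)
left = counts_matching(total_posts, 0)

print(hard_right, centrist, left)
```

Each percentage is consistent with exactly one count (nine, two and zero posts respectively), and those counts sum to 11.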

And also as before, there was a bias in the support shown for each of the two presidential candidates. Nawrocki had one more post supporting him than opposing him (four supportive, three opposed), whereas the centrist Trzaskowski had three more posts opposing him than supporting (two supportive, five opposed).
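The net figures follow directly from the supportive and opposed counts given above. A minimal Python sketch of that subtraction, with the class name `CandidateTally` chosen purely for illustration:

```python
from dataclasses import dataclass


@dataclass
class CandidateTally:
    """Posts about one candidate in the fourth (unseeded) test."""
    supportive: int
    opposed: int

    @property
    def net(self) -> int:
        # Positive means more supportive than opposing posts.
        return self.supportive - self.opposed


# Counts as stated in the article for the fourth test account.
fourth_test = {
    "Nawrocki": CandidateTally(supportive=4, opposed=3),
    "Trzaskowski": CandidateTally(supportive=2, opposed=5),
}

for name, tally in fourth_test.items():
    print(f"{name}: net {tally.net:+d}")
```

The signs differ: the net is positive for Nawrocki and negative for Trzaskowski.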

While these results are only from one test, they give a flavour of what a new TikTok user in Poland who follows no-one but is interested in the upcoming election could be shown.

The suggestions of TikTok’s algorithm

Social media companies design content recommendation systems to hold users’ attention. As we’ve made clear previously, our investigation does not allege that TikTok’s algorithms have deliberately been designed to favour right-wing content over other political content.

The issue may lie in the nature of this content – hard right content can be more provocative or outrageous, and can therefore get more engagement than more centrist content.

Nevertheless, the algorithm’s design to amplify engaging content can lead to the disproportionate boosting of some political views over others, or open it to external manipulation, both of which pose risks to civic discourse and democracy.

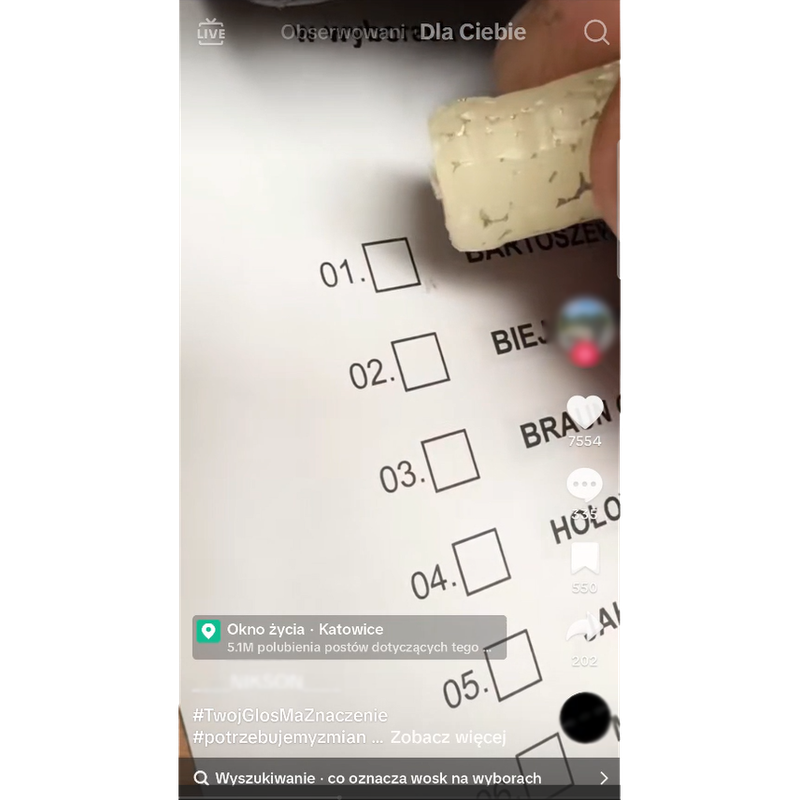

A post that TikTok showed us of someone voting for far-right candidate Grzegorz Braun while putting wax on the ballot squares of other candidates. Provocative content of this kind is more likely to result in user engagement and amplification by platform algorithms

Next steps for safeguarding election integrity

Under the EU’s Digital Services Act, Big Tech platforms are responsible for addressing the dangers their services pose to our elections. As we’ve observed in multiple elections over the past six months, TikTok’s recommender system consistently pushed more right-wing content to new users equally interested in all candidates or parties.

TikTok should review why this keeps happening and share more information as to how the other signals the algorithm uses skew the political balance of posts in the For You page. It should also facilitate external researchers’ access to data on its recommender systems, to bolster public interest research into the influence and effects of its platform.

With another investigation finding similar patterns, we call on the European Commission to continue examining possible political bias in TikTok’s content recommendation algorithms and related risks.

Our methodology in more detail

- We set up three new TikTok accounts over two days, 20 and 21 May 2025, in Warsaw, Poland.

- Before setting up each new TikTok account, we first reset the phone to its factory settings so that the device did not have any previous usage history that might influence what posts TikTok’s algorithm showed us.

- We seeded each account by engaging with the accounts of the two presidential candidates. For each of the two candidates, we searched for the candidate’s official TikTok account, went to the candidate’s page and clicked “follow”, then spent five minutes watching content posted by the candidate.

- We then went to the app’s For You page, TikTok’s page that recommends content to users. TikTok says recommendations are “based on several factors”, including “interests and engagement.”

- For any post that featured political content, we lingered on the images or video.

- For any other recommended content, we swiftly skipped past it by swiping on to the next post.

- After spending 10 minutes on the For You page, we stopped and reviewed all the content shown.

- In light of previous tests and feedback from TikTok, we additionally created a fourth account following the steps above except for the seeding step (the third step in this list).

- We manually categorised all the political posts shown to us into five brackets (far-right; nationalist right; centrist; left-wing; not possible to determine) according to the political views expressed or the people platformed. We labelled posts supportive of Slawomir Mentzen or Grzegorz Braun or their parties or views as far-right; those supportive of Karol Nawrocki or his party or views as nationalist right; those supportive of Rafal Trzaskowski or Szymon Holownia or their parties or views as centrist; and those supportive of Adrian Zandberg or Magdalena Biejat or their parties or views as left-wing. We also recorded all posts that were supportive of or opposed to either of the two remaining presidential candidates.
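The labelling scheme in the final step can be sketched as a simple lookup and tally. This is a minimal Python illustration only: the categorisation in the investigation was done manually, and the names `BRACKET_BY_FIGURE`, `bracket_for`, `tally` and the sample list are all hypothetical. The sample is invented to show the mechanics and is not data from the tests.

```python
from collections import Counter
from typing import Optional

# Bracket assigned to posts supportive of each figure, as described above.
BRACKET_BY_FIGURE = {
    "Slawomir Mentzen": "far-right",
    "Grzegorz Braun": "far-right",
    "Karol Nawrocki": "nationalist right",
    "Rafal Trzaskowski": "centrist",
    "Szymon Holownia": "centrist",
    "Adrian Zandberg": "left-wing",
    "Magdalena Biejat": "left-wing",
}
UNDETERMINED = "not possible to determine"


def bracket_for(supported_figure: Optional[str]) -> str:
    """Map the figure a post supports to a bracket; unknown or None is undetermined."""
    if supported_figure is None:
        return UNDETERMINED
    return BRACKET_BY_FIGURE.get(supported_figure, UNDETERMINED)


def tally(supported_figures: list[Optional[str]]) -> Counter:
    """Count posts per bracket from a list of manually recorded labels."""
    return Counter(bracket_for(figure) for figure in supported_figures)


# Invented sample of manual labels, for illustration only.
sample = ["Karol Nawrocki", "Rafal Trzaskowski", "Slawomir Mentzen", None, "Karol Nawrocki"]
counts = tally(sample)
print(counts)
```

Keeping the lookup table separate from the counting step makes the labelling rules easy to inspect and to apply consistently across accounts.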