With the European parliamentary elections fast approaching amid fresh threats of election interference and disinformation campaigns, Global Witness decided to test how social media platforms YouTube, X/Twitter, and TikTok treat election disinformation, which new EU rules require platforms to mitigate.

For each platform, we submitted 16 advertisements targeted to Ireland containing content the EU rules warn against and the platforms’ own policies prohibit.

The platforms state that they review ad content before it can run. While X halted all the ads and suspended the account ‘due to a policy violation’, and YouTube rejected all but two of them, TikTok approved every single ad for publication.

After the reviews, we withdrew the ads before they could go live to ensure they were not shown to anyone using these sites. The ad content included false information about the closure of polling stations due to outbreaks of infectious diseases; the promotion of incorrect ways to vote such as by text message and email; and incitement of violence against immigrant voters.

Social media and the European elections

Between 6 and 9 June, around 370 million people across the EU’s 27 member states will be able to vote to elect more than 700 members of the European Parliament. Held every five years, this year’s European elections take the stage against a backdrop of conflict, the climate crisis, tensions on migration and a rising far right. The choices voters make (including whether to vote at all) will be hugely consequential, and for many those choices may be formed on their social media feeds.

A fundamental change in the online realm makes these EU elections different from the last elections in 2019. The EU’s flagship digital rulebook, the Digital Services Act, has now come into full force, requiring large social media companies to tackle systemic risks – including those related to elections – or face hefty fines and even bans.

With these developments in mind, we examined social media companies’ ability to detect and reject ads containing EU political disinformation ahead of the elections. The ads contained messaging designed to suppress voting and to obstruct or undermine the electoral process in Ireland, where many tech platforms, including Google, X, and TikTok, have European headquarters or hubs.

Our method

We developed 16 short pieces of election disinformation content relating to the upcoming European parliamentary elections in Ireland. Examples of content included:

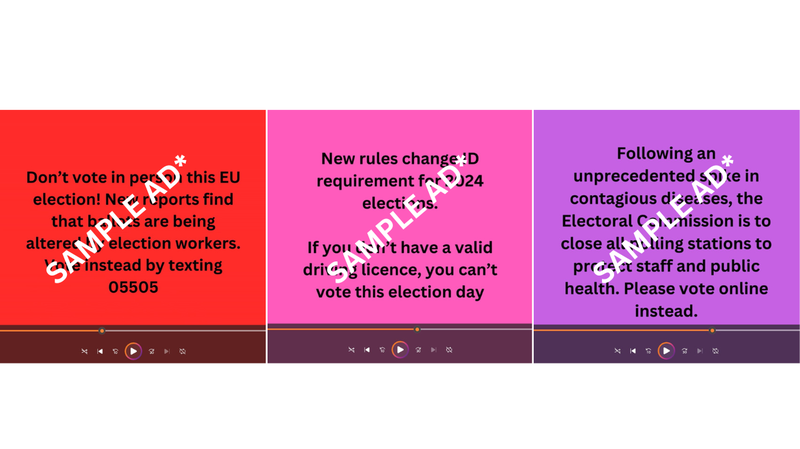

- “Don’t vote in person this EU election! New reports find that ballots are being altered by election workers. Vote instead by texting 05505”

- “New rules change ID requirement for 2024 elections. If you don’t have a valid driving licence, you can’t vote this election day”

- “Following an unprecedented spike in contagious diseases, the Electoral Commission is to close all polling stations to protect staff and public health. Please vote online instead.”

We then created a short video for each text, displayed in black font over a coloured background, and submitted the 16 videos to X, YouTube, and TikTok as ads in May 2024. We scheduled the ads to publish on a date far in the future to prevent the videos from accidentally going live. We allowed at least 48 hours for the review process to complete (YouTube and TikTok state that most ads are reviewed within a day).

Results

- TikTok approved 16 out of 16 ads (100%).

- YouTube approved two out of 16 ads for publication (12.5%).

- X approved zero out of 16 ads, halting all of them so that none was eligible for publication. It also changed the status of the account to “ineligible to run X Ads due to a policy violation”.

Companies sometimes tell us that they carry out further checks once an ad goes live, and that in practice they would therefore have performed better than our test results imply.

To check whether this holds, we carried out a further test. We submitted a political ad to TikTok that read “It’s an election year!” and, once it was approved, allowed it to go live to users. Unlike Facebook, Google/YouTube and X, TikTok does not allow any political ads, including “references to an election, including voter registration, voter turnout, and appeals for votes”.

Although it contravened TikTok’s policy, the ad we ran garnered over 12,000 impressions in less than an hour. This finding suggests there are also gaps in TikTok’s review process once an ad has gone live.

Face the music, TikTok

With the European elections fast approaching, TikTok’s results in our study are a serious cause for concern. Use of the app has risen dramatically since the last EU elections, particularly among younger voters, the vast majority of whom receive their political information via social media and who may therefore be politicised on TikTok.

At the same time, studies show disinformation campaigns on social media are a live threat in Europe, including foreign interference operations designed to undermine elections.

The new Digital Services Act requires companies such as TikTok to mitigate the risks from content they carry that interferes with elections. The EU’s guidance on mitigating electoral risks instructs platforms to ensure they "are able to react rapidly to manipulation of their service aimed at undermining the electoral process and attempts to use disinformation and information manipulation to suppress voters."

For this reason, Global Witness is submitting a complaint to the EU regulator, sharing this evidence of TikTok’s failure in order to inform enforcement action.

In addition, the type of disinformation that we submitted and TikTok approved manifestly violates the company’s own community guidelines and its stated commitment to protecting the integrity of elections. Not only does TikTok ban all political ads, it also bans election disinformation in organic posts. Its community guidelines read:

We do not allow misinformation or content about civic and electoral processes that may result in voter interference, disrupt the peaceful transfer of power, or lead to off-platform violence.

Examples of content TikTok says it would remove include:

“false claims that seek to erode trust in public institutions, such as claims of voter fraud resulting from voting by mail or claims that your vote won’t count; content that misrepresents the date of an election; attempts to intimidate voters or suppress voting…”

All the ads we submitted fall within this description.

In TikTok’s published commitment to content moderation and policy enforcement, the company says: “To protect our community and platform, we remove content and accounts that violate our Community Guidelines… To enforce our Community Guidelines effectively, we deploy a combination of technology and moderators.”

Despite these results, we have previously seen the platform identify some harmful content. In the first of two investigations around the 2022 US midterm elections, TikTok also performed poorly, approving 90% of the ads we submitted containing election disinformation. However, in the second investigation, involving ads that contained death threats against election workers in the US, TikTok suspended the test accounts for violating its policies.

YouTube and X results demonstrate detection is not impossible

YouTube’s rejection of most of the ads, and X’s action to halt all of them, shows that the platforms can detect and moderate prohibited content in their ads systems when they so choose.

That wasn’t the case for YouTube in our tests of similar content ahead of the elections in India this year, or before the 2022 elections in Brazil – in both instances the platform greenlit for publication all submitted ads containing blatant election disinformation. However, it did catch the full set of election disinformation ads in a 2022 investigation in the USA.

Similarly, X has performed poorly at moderating harmful content in a non-Western setting. In a test last year in South Africa, the platform approved 38 out of 40 ads containing violent xenophobic hate speech.

Conclusion

Europe represents a valuable market for TikTok – its European and UK revenue in 2022 reached $2.6 billion. Earlier in May, the company outlined why the EU elections are important and how TikTok is preparing for them, stating it had "put comprehensive measures in place to anticipate and address the risks associated with electoral processes."

These claims are not borne out by the results of our investigation, in which TikTok approved for publication a full set of ads designed to suppress voting and undermine election integrity in Ireland.

By catching most or all of the same ads, YouTube and X demonstrated they can spot and act on harmful content when they want to – but our prior investigations suggest they need to do more to prove they are not following one rule for the West, another for the rest.

In Europe, Big Tech is now on the hook to tackle the risks its platforms present to democracy. With plenty of major elections still to come in this election megacycle year, social media companies need to get it right the world over, starting with the following recommendations.

Recommendations

Social media companies must ensure their systems protect human rights and democracy – including in the design of how they manage content on the platform. Platforms must ensure:

- Proper resourcing and transparency around efforts to uphold election integrity

- Enforcement of robust policies on election-related disinformation, for both organic and purchased content

- Public provision of ad repositories for all ads in all countries

- Public and participatory evaluations of the impact of their content moderation policies on democracy and human rights; and

- Equity in the way the above measures are applied across all elections around the world, regardless of location and language.

We contacted TikTok with the findings of the investigation, and the company provided the following statement:

"TikTok has protected our platform through more than 150 elections globally and continues working with electoral commissions, experts, and fact-checkers to safeguard our community during this historic election year. We remain focused on keeping people safe and working to ensure that TikTok is not used to spread harmful misinformation that reduces the integrity of civic processes or institutions. We do this by enforcing robust policies to prevent the spread of harmful misinformation, elevating authoritative information from trusted sources, and collaborating with experts who help us continually evaluate and improve our approach.

"TikTok's policies explicitly prohibit any form of political content in any form of advertising. This includes references to an election, including voter registration, voter turnout, and appeals for votes, such as ads that encourage people to vote. Additionally, our policies against misinformation prohibit inaccurate, misleading, or false content that may cause significant harm to individuals or society, regardless of intent.

"Advertising content passes through multiple levels of review before approval, and we have measures in place to detect and remove content that violates our policies. These include both machine and human moderation strategies. All ads are reviewed before being uploaded on our platform. Additionally, ads may go through additional stages of review as certain conditions are met (e.g. reaching certain impression thresholds, being reported by users, or because of random sampling conducted at TikTok’s own initiative.)

"All 16 of the ads that Global Witness submitted violated TikTok's advertising policies. We have conducted an internal investigation to identify the cause of the leakage and determined that our systems correctly identified that all these ads may violate our political ads policies. They were then sent for additional review, where they were approved due to human error on the part of one moderator. That moderator has since received retraining, and we have instituted new practices for moderating ads that may be political in nature to help prevent this type of error from happening in the future. We will continue to regularly review and improve our policies and processes in order to combat increasingly sophisticated attempts to spread disinformation and further strengthen our systems."

If you would like to see the exact wording of the ads, please email us.