Bot-like accounts have spread conspiracy theories about climate cults and a global elite, amplifying disinformation on social media

Bot-like accounts that have actively participated in political discussions around key events are consistently sharing climate disinformation and using it to foment fear, hate and mistrust of proponents of climate action.

Earlier this year, we published an investigation into 45 bot-like accounts on X that had amplified divisive political content since the UK general election, including “Great Replacement” conspiracy material, and had responded to global events with racism, disinformation and conspiracy theories.

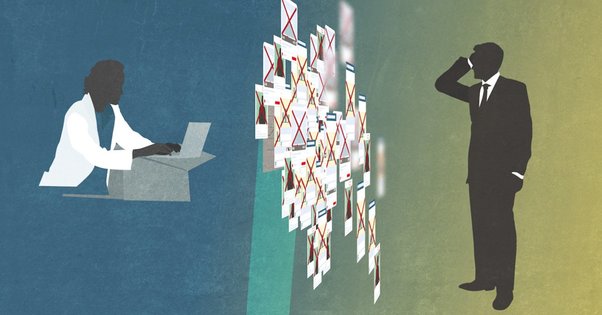

As we explained in that investigation, we identified accounts exhibiting bot-like behaviour on X using a methodology that looked for a series of “red flags”, such as prolific posting, a low volume of original content and signs that an account had been set up quickly to respond to an event.

The methodology also included a manual search for any evidence of authentic human behaviour.

We found that a number of these accounts were amplifying climate denial and disinformation. In the period after the UK general election, 14 of these accounts shared the hashtag #ClimateScam, often in conjunction with other conspiracy hashtags such as #geoengineering.

In August 2024, we took a closer look at the climate discussion and climate disinformation shared by these accounts.

Our findings are set out below. This investigation did not involve any further assessment of the accounts’ status after they were identified as “bot-like” in July 2024.

Explicit climate denial and disinformation amplified by bot-like accounts

Among these accounts’ posts mentioning “climate change”, the most frequently used hashtag was an explicitly denialist one: #climatescam.

Other hashtags reflecting climate conspiracism and denial included #netzeroscam, #climatecult, #climatechangecult and #bladerunners (a hashtag referring to people who vandalise cameras installed to enforce low-emission traffic zones).

Twenty-two of the 45 accounts we looked at shared content that referenced either a climate cult or a climate scam.

As well as denying that climate change is an existential threat, some of these accounts went further, spreading conspiracy theories claiming that the real existential threat comes from those who support climate action.

Claim 1: Climate action threatens the natural world and human life

Bot-like accounts amplified the idea that climate action is dangerous. This included highlighting or claiming that significant environmental damage and destruction has occurred, or will occur, as a result of installing solar panels or wind farms.

This was portrayed in some cases as a “sacrifice” demanded by the “cult” of climate action.

Accounts also amplified more extreme claims: that the purpose of the climate “cult” is to deliberately reduce people’s quality of life, or that “geoengineering” is deliberately designed to make people sick so that they will not protest against the curtailment of their freedoms.

Claim 2: Climate action is a cover for elites to profit and for governments to control their populations

Some of the accounts also claimed that policies to tackle the climate emergency are simply a means of gaining money and power, or of increasing control over people, including by making the population sick and weak.

Accounts called for resistance to environmental policies. They also discussed and supported the “blade runners”, who vandalise ULEZ (Ultra Low Emission Zone) cameras in protest against the surveillance and control the cameras allegedly introduce or represent.

Climate discussion is closely tied to political identity

The bot-like accounts we looked at were not explicitly dedicated to discussions of climate.

Accounts mentioned “climate” or “climate change” relatively infrequently. Across more than 600,000 posts published between 22 May and 22 July 2024, the 45 bot-like accounts mentioned “climate” 3,547 times, including 1,492 mentions of “climate change”, so the term appeared in well under 1% of their posts.

However, every one of the accounts mentioned “climate change” at least once during this period, and the topic was consistently present in their discussion over time.

Posts shared by these accounts often paired a stance on climate with other political positions, for example opposing climate action alongside opposing LGBTQIA+ rights, vaccination or involvement in conflicts such as the war in Ukraine.

Conversely, some accounts treated support for climate action, LGBTQIA+ rights, migration, vaccination or Ukraine as a single political identity: that of “globalists” or “far left fascists”.

However, not all of the bot-like accounts opposed climate action. Some posted in support of climate or ecological action.

Accounts that mentioned climate terms in their bios generally did so to express support for climate action, listing the climate or the environment alongside other traditionally left-wing positions, such as supporting Labour, disliking the Tories or wanting the UK to rejoin the EU.

How do the identified bot-like accounts interact with the wider information environment?

The accounts we investigated did not operate in isolation. They frequently shared links to external websites or to other content on X.

These sites included a news site known to have shared false health and climate information.

The account of a media outlet known to promote conspiracy theories was the most retweeted account in posts mentioning climate change.

We also found other users interacting with the bot-like accounts. Sixty-four other accounts, with more than 300,000 followers between them, mentioned the bot-like accounts when posting about climate change.

There was also some evidence of connections between one of the bot-like accounts and other profiles that amplified its content, suggesting that they may have been set up for the same purpose.

Two accounts that appeared to share a name with one of the bot-like accounts retweeted it, and one of these profiles linked to an Instagram account whose profile image matched that of the bot-like account we had identified.

Recommendations

Tackling the climate emergency requires spaces for democratic discussion and civic action free from distortion.

As long as accounts are allowed to exploit political divisions to drive extreme conspiratorial narratives about climate action, building genuine consensus on the way forward will remain a significant challenge.

By their very design, social media platforms can be exploited to spread divisive and harmful content. Platforms must take responsibility for mitigating the risks their services pose to information integrity, as affirmed in the EU’s Digital Services Act.

Major social media platforms recognise the dangers posed by harmful bots and have policies that ban them.

X’s policies state that users may not “artificially amplify […] information or engage in behaviour that manipulates or disrupts people’s experience”, and that users who violate this policy may have the visibility of their posts limited and, in severe cases, their accounts suspended.

Unfortunately, these policies are not always adequately enforced. We called on X to investigate whether the bot-like accounts on the list we provided to them violate their policies, and to invest more in protecting our democratic discourse from manipulation.

After our third request for comment on our investigations into bot-like behaviour, X responded that they "have reviewed the accounts you have shared, and there is no evidence they are engaged in platform manipulation."

They stated that our "methodology relies on limited data and is incorrectly classifying real people as bots."

In subsequent discussions with Global Witness, a representative from X reiterated that our methodology was based on limited data and had identified humans as bot-like.

However, when we asked how they had determined that specific accounts were run by real people, X did not provide this information.

X were also unable to confirm how many of the bot-like accounts we had identified were, in the view of X, real humans.

Only limited data is available to researchers outside the platform, and platforms like X and Meta continue to make less and less data publicly available. Investigations like ours are therefore forced to rely on methodologies that allow us to raise concerns about accounts without 100% certainty about their authenticity (as we have made clear).

As a result, we classified these accounts as appearing like bots, or being "bot-like".

Without legislation requiring greater transparency from platforms, researchers and members of the public are left with no option but to decide whether or not to trust what X says.

As noted above, the “bot-like” accounts investigated in this article were identified in July 2024 using a methodology involving a series of “red flags” and a manual review, and that assessment has not been repeated since our initial investigation.